03

Apr

Azure Maia for the era of AI: From silicon to software to systems

As the pace of AI and the transformation it enables across industries continues to accelerate, Microsoft is committed to building and enhancing our global cloud infrastructure to meet the needs of customers and developers with faster, more performant, and more efficient compute and AI solutions. Azure AI infrastructure comprises technology from industry leaders as well as Microsoft’s own innovations, including Azure Maia 100, Microsoft’s first in-house AI accelerator, announced in November 2023. In this blog post, we dive deeper into the technology and journey of developing Azure Maia 100: a co-design of hardware and software from the ground up, built to run cloud-based AI workloads and optimized for Azure AI infrastructure.

Azure Maia 100, pushing the boundaries of semiconductor innovation

Maia 100 was designed to run cloud-based AI workloads, and the design of the chip was informed by Microsoft’s experience in running complex and large-scale AI workloads such as Microsoft Copilot. Maia 100 is one of the largest processors made on a 5nm node using advanced packaging technology from TSMC.

Through collaboration with Azure customers and leaders in the semiconductor ecosystem, such as foundry and EDA partners, we will continue to apply real-world workload requirements to our silicon design, optimizing the entire stack from silicon to service, and delivering the best technology to our customers to empower them to achieve more.

End-to-end systems optimization, designed for scalability and sustainability

When developing the architecture for the Azure Maia AI accelerator series, Microsoft reimagined the end-to-end stack so that our systems could handle frontier models more efficiently and in less time. AI workloads demand infrastructure that is dramatically different from other cloud compute workloads, requiring increased power, cooling, and networking capability. Maia 100’s custom rack-level power distribution and management integrates with Azure infrastructure to achieve dynamic power optimization. Maia 100 servers are designed with a fully custom, Ethernet-based network protocol with aggregate bandwidth of 4.8 terabits per second per accelerator to enable better scaling and end-to-end workload performance.
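
To put the networking figure in more familiar units, here is a minimal back-of-the-envelope sketch. It assumes the 4.8 terabit aggregate figure is a per-second rate and uses decimal units; the 10 GB payload is purely hypothetical, and the estimate ignores protocol overhead, latency, and topology, so it is an idealized lower bound rather than a measured result.

```python
BITS_PER_BYTE = 8

def terabits_to_gigabytes_per_second(terabits_per_second: float) -> float:
    """Convert a rate in terabits/s to gigabytes/s (decimal units)."""
    bits_per_second = terabits_per_second * 1e12
    return bits_per_second / BITS_PER_BYTE / 1e9

def ideal_transfer_seconds(payload_gigabytes: float, terabits_per_second: float) -> float:
    """Idealized transfer time: payload size divided by peak aggregate rate."""
    return payload_gigabytes / terabits_to_gigabytes_per_second(terabits_per_second)

# Assumed per-second reading of the aggregate bandwidth quoted in the post.
MAIA_100_AGGREGATE_TBPS = 4.8

if __name__ == "__main__":
    rate = terabits_to_gigabytes_per_second(MAIA_100_AGGREGATE_TBPS)
    print(f"Aggregate rate: about {rate:.0f} GB/s per accelerator")
    # Hypothetical 10 GB payload, chosen only for illustration.
    seconds = ideal_transfer_seconds(10.0, MAIA_100_AGGREGATE_TBPS)
    print(f"Ideal time for 10 GB: about {seconds * 1000:.1f} ms")
```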

When we developed Maia 100, we also built a dedicated “sidekick” to match the thermal profile of the chip and added rack-level, closed-loop liquid cooling to Maia 100 accelerators and their host CPUs to achieve higher efficiency. This architecture allows us to bring Maia 100 systems into our existing datacenter infrastructure, and to fit more servers into these facilities, all within our existing footprint. The Maia 100 sidekicks are also built and manufactured to meet our zero waste commitment.

Co-optimizing hardware and software from the ground up with the open-source ecosystem

From the start, transparency and collaborative advancement have been core tenets in our design philosophy as we build and develop Microsoft’s cloud infrastructure for compute and AI. Collaboration enables faster iterative development across the industry—and on the Maia 100 platform, we’ve cultivated an open community mindset from algorithmic data types to software to hardware.

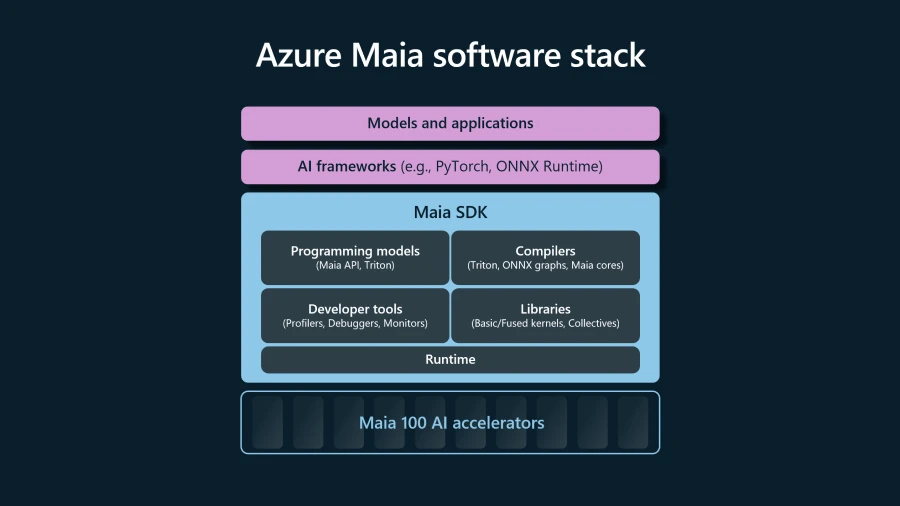

To make it easy to develop AI models on Azure AI infrastructure, Microsoft is creating the software for Maia 100 that integrates with popular open-source frameworks like PyTorch and ONNX Runtime. The software stack provides rich and comprehensive libraries, compilers, and tools to equip data scientists and developers to successfully run their models on Maia 100.

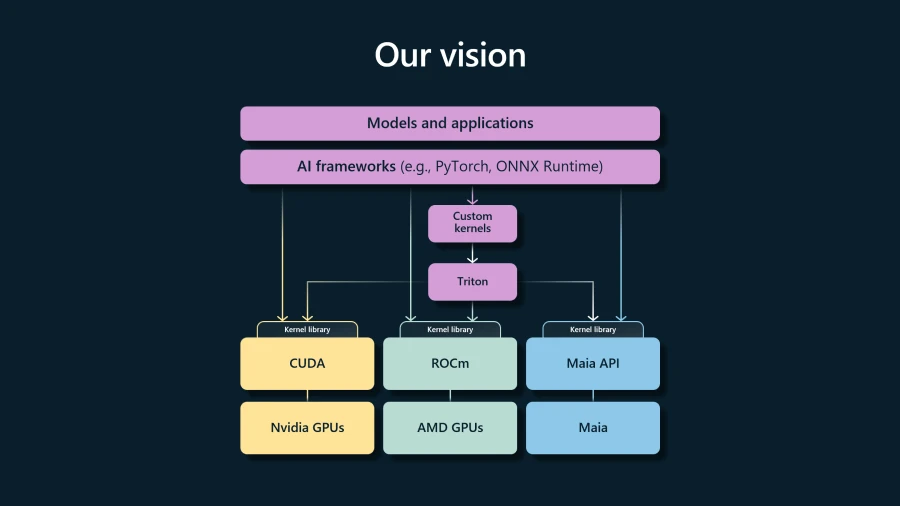

To optimize workload performance, AI hardware typically requires development of custom kernels that are silicon-specific. We envision seamless interoperability among AI accelerators in Azure, so we have integrated Triton from OpenAI. Triton is an open-source programming language that simplifies kernel authoring by abstracting the underlying hardware. This will empower developers with complete portability and flexibility without sacrificing efficiency or the ability to target AI workloads.

Maia 100 is also the first implementation of the Microscaling (MX) data format, an industry-standardized data format that leads to faster model training and inference times. Microsoft has partnered with AMD, Arm, Intel, Meta, NVIDIA, and Qualcomm to release the v1.0 MX specification through the Open Compute Project community so that the entire AI ecosystem can benefit from these algorithmic improvements.
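
For readers new to microscaling, the core idea is that a small block of values shares one power-of-two scale while each element is stored in a narrow format. The following is a minimal sketch of that idea in plain Python, not the MX specification's exact encoding and not how Maia 100 implements it. It assumes a block of 32 elements with signed 8-bit integer elements, and the function names are hypothetical.

```python
import math

BLOCK_SIZE = 32   # assumed block size: elements per shared scale
ELEM_MAX = 127    # largest magnitude of a signed 8-bit element

def mx_quantize_block(values):
    """Quantize one block to (shared_exponent, 8-bit-range integers)."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return 0, [0] * len(values)
    # Smallest power-of-two scale that keeps max_abs / scale within range.
    shared_exp = math.ceil(math.log2(max_abs / ELEM_MAX))
    scale = 2.0 ** shared_exp
    # Round to nearest integer and clamp as a guard against float error.
    elems = [max(-ELEM_MAX, min(ELEM_MAX, round(v / scale))) for v in values]
    return shared_exp, elems

def mx_dequantize_block(shared_exp, elems):
    """Recover approximate floats from a shared exponent and integer elements."""
    scale = 2.0 ** shared_exp
    return [e * scale for e in elems]

def mx_quantize(values):
    """Split a flat list into blocks and quantize each block independently."""
    return [
        mx_quantize_block(values[start:start + BLOCK_SIZE])
        for start in range(0, len(values), BLOCK_SIZE)
    ]

if __name__ == "__main__":
    data = [3.0 * math.sin(i) for i in range(64)]
    for shared_exp, elems in mx_quantize(data):
        print(f"shared exponent {shared_exp}, first elements {elems[:4]}")
```

Because the scale is chosen per block rather than per tensor, a block of small values keeps fine resolution even when other blocks contain large values, which is the property that lets narrow element formats stay accurate.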

Azure Maia 100 is a unique innovation combining state-of-the-art silicon packaging techniques, ultra-high-bandwidth networking design, modern cooling and power management, and algorithmic co-design of hardware with software. We look forward to continuing to advance our goal of making AI real by introducing more silicon, systems, and software innovations into our datacenters globally.

Learn more

- Read the announcement: With a systems approach to chips, Microsoft aims to tailor everything ‘from silicon to service’ to meet AI demand.

- Watch Satya Nadella’s keynote at Ignite 2023: AI Infrastructure: Satya Nadella at Microsoft Ignite 2023.

- Watch a demo of GitHub Copilot running on Azure Maia 100: Inside Microsoft AI innovations with Mark Russinovich.

- Learn more about Azure AI Infrastructure.

- Learn more about Azure AI.

The post Azure Maia for the era of AI: From silicon to software to systems appeared first on Microsoft Azure Blog.