06 Nov

Building for the future: The enterprise generative AI application lifecycle with Azure AI

In our previous blog, we explored the emerging practice of large language model operations (LLMOps) and the nuances that set it apart from traditional machine learning operations (MLOps). We discussed the challenges of scaling large language model-powered applications and how Microsoft Azure AI uniquely helps organizations manage this complexity. We touched on the importance of considering the development journey as an iterative process to achieve a quality application.

In this blog, we’ll explore these concepts in more detail. The enterprise development process requires collaboration, diligent evaluation, risk management, and scaled deployment. By providing a robust suite of capabilities supporting these challenges, Azure AI affords a clear and efficient path to generating value in your products for your customers.

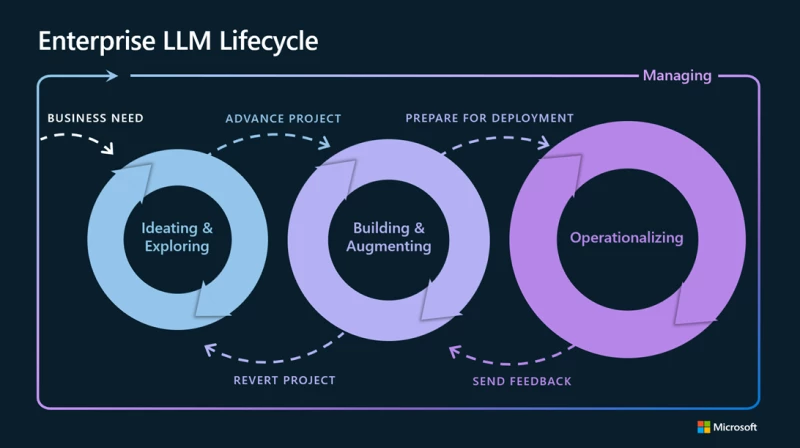

Enterprise LLM Lifecycle

Ideating and exploring loop

The first loop typically involves a single developer searching a model catalog for large language models (LLMs) that align with their specific business requirements. Working with a subset of data and prompts, the developer tries to understand the capabilities and limitations of each model through prototyping and evaluation. Developers usually experiment with different prompts, chunk sizes, vector indexing methods, and basic interactions while trying to validate or refute business hypotheses. For instance, in a customer support scenario, they might input sample customer queries to see if the model generates appropriate and helpful responses. They can validate this first by typing in examples, but quickly move to bulk testing with files and automated metrics.
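The move from typing in examples to bulk testing can be sketched as follows. This is a minimal illustration, not a prescribed method: `fake_model` is a hypothetical stand-in for a call to a deployed LLM, and the keyword hit rate is an assumed, deliberately simple automated metric.

```python
from typing import Callable, Dict, List


def fake_model(query: str) -> str:
    """Hypothetical stand-in for a call to a deployed LLM."""
    canned = {
        "How do I reset my password?":
            "Open Settings, choose Security, then select Reset password.",
        "Where is my order?":
            "You can track your order from the Orders page.",
    }
    return canned.get(query, "Sorry, I am not sure.")


def keyword_hit_rate(cases: List[Dict], model: Callable[[str], str]) -> float:
    """Fraction of test cases whose answer contains every expected keyword."""
    hits = 0
    for case in cases:
        answer = model(case["query"]).lower()
        if all(kw.lower() in answer for kw in case["expected_keywords"]):
            hits += 1
    return hits / len(cases)


# In practice these cases would be loaded from a file of sample queries.
test_cases = [
    {"query": "How do I reset my password?", "expected_keywords": ["reset", "password"]},
    {"query": "Where is my order?", "expected_keywords": ["track", "order"]},
    {"query": "Can I get a refund?", "expected_keywords": ["refund"]},
]

score = keyword_hit_rate(test_cases, fake_model)
print(f"keyword hit rate: {score:.2f}")
```

Swapping `fake_model` for a real model call keeps the rest of the harness unchanged, which makes it easy to compare candidate models on the same test file.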

Beyond Azure OpenAI Service, Azure AI offers a comprehensive model catalog, which empowers users to discover, customize, evaluate, and deploy foundation models from leading providers such as Hugging Face, Meta, and OpenAI. This helps developers find and select optimal foundation models for their specific use case. Developers can quickly test and evaluate models using their own data to see how the pre-trained model would perform for their desired scenarios.

Building and augmenting loop

Once a developer discovers and evaluates the core capabilities of their preferred LLM, they advance to the next loop which focuses on guiding and enhancing the LLM to better meet their specific needs. Traditionally, a base model is trained with point-in-time data. However, often the scenario requires either enterprise-local data, real-time data, or more fundamental alterations.

For reasoning on enterprise data, Retrieval Augmented Generation (RAG) is the preferred approach: it injects information from internal data sources into the prompt based on the specific user request. Common sources are document search systems, structured databases, and NoSQL stores. With RAG, a developer can “ground” their solution, using the LLM to process the injected data and generate responses based on it. This helps developers achieve customized solutions while maintaining relevance and optimizing costs. Because the data comes from external sources, RAG also allows continuous data updates without fine-tuning.
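The RAG pattern described above can be sketched in a few lines. This is an illustration under stated assumptions: the word-overlap retriever stands in for a real document search or vector index, and the function names, prompt wording, and sample documents are all hypothetical.

```python
import re
from typing import List


def words(text: str) -> set:
    """Lowercased word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Toy retriever: rank documents by word overlap with the question."""
    q_terms = words(question)
    scored = [(len(q_terms & words(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for overlap, doc in scored[:top_k] if overlap > 0]


def build_grounded_prompt(question: str, passages: List[str]) -> str:
    """Inject the retrieved passages into the prompt sent to the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you do not know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


documents = [
    "Refunds are issued within 14 days of a return request.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available by chat from 9am to 5pm.",
]

question = "How long do refunds take?"
passages = retrieve(question, documents)
prompt = build_grounded_prompt(question, passages)
print(prompt)
```

Updating the `documents` collection changes what the model sees on the next request, with no change to the model itself.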

During this loop, the developer may find cases where the output accuracy doesn’t meet desired thresholds. Another method to alter the outcome of an LLM is fine-tuning. Fine-tuning helps most when the nature of the system needs to be altered. Generally, the LLM will answer any prompt in a similar tone and format. But if the use case requires a consistent change or restriction in the output, such as code or JSON, fine-tuning can better align the system’s responses with the specific requirements of the task at hand. By adjusting the parameters of the LLM during fine-tuning, the developer can significantly improve the output accuracy and relevance, making the system more useful and efficient for the intended use case.
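As an illustration of preparing data for the JSON-output case, the sketch below builds training examples as JSON Lines. It assumes the chat-style layout commonly used for fine-tuning chat models (one `messages` list per line); the system prompt, sample orders, and function name are hypothetical, and the exact schema required by a given service should be checked against its documentation.

```python
import json

# Hypothetical instruction shared by every training example.
SYSTEM = "Extract the order details and reply with JSON only."

# Hypothetical (user text, desired structured output) pairs.
raw_examples = [
    ("I want two large pizzas", {"item": "pizza", "size": "large", "quantity": 2}),
    ("One small coffee please", {"item": "coffee", "size": "small", "quantity": 1}),
]


def to_training_line(user_text: str, target: dict) -> str:
    """Serialize one example as a single JSON Lines record."""
    record = {
        "messages": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": user_text},
            # The assistant turn holds the exact output format to be learned.
            {"role": "assistant", "content": json.dumps(target)},
        ]
    }
    return json.dumps(record)


jsonl = "\n".join(to_training_line(u, t) for u, t in raw_examples)
print(jsonl)
```

Every assistant turn uses the same strict JSON shape, which is the consistent restriction on output that fine-tuning is meant to teach.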

It is also feasible to combine prompt engineering, RAG, and a fine-tuned LLM. Since fine-tuning requires additional data, most users start with prompt engineering and modifications to data retrieval before proceeding to fine-tune the model.

Most importantly, continuous evaluation is an essential element of this loop. During this phase, developers assess the quality and overall groundedness of their LLMs. The end goal is to facilitate safe, responsible, and data-driven insights to inform decision-making while ensuring the AI solutions are primed for production.

Azure AI prompt flow

Azure AI prompt flow is a pivotal component in this loop. Prompt flow helps teams streamline the development and evaluation of LLM applications by providing tools for systematic experimentation and a rich array of built-in templates and metrics. This ensures a structured and informed approach to LLM refinement. Developers can also effortlessly integrate with frameworks like LangChain or Semantic Kernel, tailoring their LLM flows based on their business requirements. The addition of reusable Python tools enhances data processing capabilities, while simplified and secure connections to APIs and external data sources afford flexible augmentation of the solution. Developers can also use multiple LLMs as part of their workflow, applied dynamically or conditionally to work on specific tasks and manage costs.

With Azure AI, evaluating the effectiveness of different development approaches becomes straightforward. Developers can easily craft and compare the performance of prompt variants against sample data, using insightful metrics such as groundedness, fluency, and coherence. In essence, throughout this loop, prompt flow is the linchpin, bridging the gap between innovative ideas and tangible AI solutions.
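Comparing prompt variants against sample data can be sketched as below. The outputs are hypothetical recorded responses for two variants, and `groundedness_proxy` is an assumed, crude word-overlap score used only for illustration; it is not the built-in groundedness metric.

```python
import re
from typing import Dict, List


def tokens(text: str) -> set:
    """Lowercased word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def groundedness_proxy(answer: str, context: str) -> float:
    """Share of answer words that also appear in the source context."""
    answer_words = tokens(answer)
    if not answer_words:
        return 0.0
    return len(answer_words & tokens(context)) / len(answer_words)


context = "Refunds are issued within 14 days of a return request."

# Hypothetical outputs collected by running each prompt variant on the same input.
outputs: Dict[str, List[str]] = {
    "variant_a": ["Refunds are issued within 14 days."],
    "variant_b": ["Refunds usually arrive instantly."],
}

scores = {
    name: sum(groundedness_proxy(o, context) for o in outs) / len(outs)
    for name, outs in outputs.items()
}
best = max(scores, key=scores.get)
print(scores, "best:", best)
```

The same loop extends to more variants and more sample rows; only the metric function needs to change to compare on fluency or coherence instead.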

Operationalizing loop

The third loop captures the transition of LLMs from development to production. This loop primarily involves deployment, monitoring, incorporating content safety systems, and integrating with CI/CD (continuous integration and continuous deployment) processes. This stage of the process is often managed by production engineers who have existing processes for application deployment. Central to this stage is collaboration, facilitating a smooth handoff of assets between application developers and data scientists building on the LLMs, and production engineers tasked with deploying them.

Deployment allows for a seamless transfer of LLMs and prompt flows to endpoints for inference without the need for a complex infrastructure setup. Monitoring helps teams track and optimize their LLM application’s safety and quality in production. Content safety systems help detect and mitigate misuse and unwanted content, both on the ingress and egress of the application. Combined, these systems fortify the application against potential risks, improving alignment with risk, governance, and compliance standards.
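Checking content on both ingress and egress can be pictured as a wrapper around the model call. The sketch below is an assumption-laden illustration: the blocklist check is a hypothetical stand-in for a real content safety service, and the model functions are toy placeholders.

```python
from typing import Callable

# Hypothetical blocklist; a production system would call a content safety service.
BLOCKED_TERMS = {"credit card number", "password dump"}


def is_flagged(text: str) -> bool:
    """Return True if the text contains any blocked term."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def guarded_call(user_input: str, model: Callable[[str], str]) -> str:
    """Screen the input before the model runs and the output before it is returned."""
    if is_flagged(user_input):          # ingress check
        return "Request blocked by input filter."
    output = model(user_input)
    if is_flagged(output):              # egress check
        return "Response withheld by output filter."
    return output


def echo_model(prompt: str) -> str:
    """Toy model that produces harmless output."""
    return f"You asked: {prompt}"


def leaky_model(prompt: str) -> str:
    """Toy model that produces unwanted output."""
    return "Here is a password dump."


print(guarded_call("What are your hours?", echo_model))
print(guarded_call("Give me a credit card number", echo_model))
print(guarded_call("hello", leaky_model))
```

The egress check matters even when the input looks harmless, as the third call shows.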

Unlike traditional machine learning models that might classify content, LLMs fundamentally generate content. This content often powers end-user-facing experiences like chatbots, with the integration often falling on developers who may not have experience managing probabilistic models. LLM-based applications often incorporate agents and plugins that let models trigger actions, which can also amplify risk. These factors, combined with the inherent variability of LLM outputs, show why risk management is critical in LLMOps.

Azure AI prompt flow ensures a smooth deployment process to managed online endpoints in Azure Machine Learning. Because prompt flows are well-defined files that adhere to published schemas, they are easily incorporated into existing productization pipelines. Upon deployment, Azure Machine Learning invokes the model data collector, which autonomously gathers production data. That way, monitoring capabilities in Azure AI can provide a granular understanding of resource utilization, ensuring optimal performance and cost-effectiveness through token usage and cost monitoring. More importantly, customers can monitor their generative AI applications for quality and safety in production, with scheduled drift detection based on either built-in or customer-defined metrics. Developers can also use Azure AI Content Safety to detect and mitigate harmful content or use the built-in content safety filters provided with Azure OpenAI Service models. Together, these systems provide greater control, quality, and transparency, delivering AI solutions that are safer, more efficient, and better aligned with the organization’s compliance standards.
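The idea behind scheduled drift detection on a quality metric can be sketched as a comparison between a baseline window and a recent window. The scores, threshold, and function name here are hypothetical, and the mean-drop rule is an assumed simplification rather than the service's actual detection logic.

```python
from statistics import mean
from typing import List, Tuple


def detect_drift(
    baseline_scores: List[float],
    recent_scores: List[float],
    max_drop: float = 0.1,
) -> Tuple[bool, float]:
    """Flag drift when the mean metric falls by more than max_drop."""
    drop = mean(baseline_scores) - mean(recent_scores)
    return drop > max_drop, drop


# Hypothetical groundedness scores collected at evaluation time and in production.
baseline = [0.92, 0.90, 0.94, 0.91]
recent = [0.78, 0.80, 0.75, 0.79]

drifted, drop = detect_drift(baseline, recent)
print(f"drift detected: {drifted}, mean drop: {drop:.4f}")
```

Running a check like this on a schedule against collected production data turns a customer-defined metric into an alert.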

Azure AI also helps to foster closer collaboration among diverse roles by facilitating the seamless sharing of assets like models, prompts, data, and experiment results using registries. Assets crafted in one workspace can be effortlessly discovered in another, ensuring a fluid handoff of LLMs and prompts. This not only enables a smoother development process but also preserves the lineage across both development and production environments. This integrated approach ensures that LLM applications are not only effective and insightful but also deeply ingrained within the business fabric, delivering unmatched value.

Managing loop

The final loop in the Enterprise LLM Lifecycle lays down a structured framework for ongoing governance, management, and security. AI governance can help organizations accelerate their AI adoption and innovation by providing clear and consistent guidelines, processes, and standards for their AI projects.

Azure AI provides built-in AI governance capabilities for privacy, security, compliance, and responsible AI, as well as extensive connectors and integrations to simplify AI governance across your data estate. For example, administrators can set policies to allow or enforce specific security configurations, such as whether your Azure Machine Learning workspace uses a private endpoint. Or, organizations can integrate Azure Machine Learning workspaces with Microsoft Purview to publish metadata on AI assets automatically to the Purview Data Map for easier lineage tracking. This helps risk and compliance professionals understand what data is used to train AI models, how base models are fine-tuned or extended, and where models are used across different production applications. This information is crucial for supporting responsible AI practices and providing evidence for compliance reports and audits.

Whether you are building generative AI applications with open-source models, Azure’s managed OpenAI models, or your own pre-trained custom models, Azure AI makes it easier to deliver safe, secure, and reliable AI solutions on purpose-built, scalable infrastructure.

Explore the harmonized journey of LLMOps at Microsoft Ignite

As organizations delve deeper into LLMOps to streamline processes, one truth becomes abundantly clear: the journey is multifaceted and requires a diverse range of skills. While tools and technologies like Azure AI prompt flow play a crucial role, the human element—and diverse expertise—is indispensable. It’s the harmonious collaboration of cross-functional teams that creates real magic. Together, they ensure the transformation of a promising idea into a proof of concept and then a game-changing LLM application.

As we approach our annual Microsoft Ignite conference this month, we will continue to post updates to our product line. Join us for more groundbreaking announcements and demonstrations and stay tuned for our next blog in this series.

The post Building for the future: The enterprise generative AI application lifecycle with Azure AI appeared first on Azure Blog.