04 Dec

The seven pillars of modern AI development: Leaning into the era of custom copilots

In an era where technology is rapidly advancing and information consumption is exponentially growing, there are many new opportunities for businesses to manage, retrieve, and utilize knowledge. The integration of generative AI (content creation by AI) and knowledge retrieval mechanisms is revolutionizing knowledge management, making it more dynamic and readily available. Generative AI offers businesses more efficient ways to capture and retrieve institutional knowledge, improving user productivity by reducing time spent looking for information.

This business transformation was enabled by copilots. Azure AI Studio is the place for AI Developers to build custom copilot experiences.

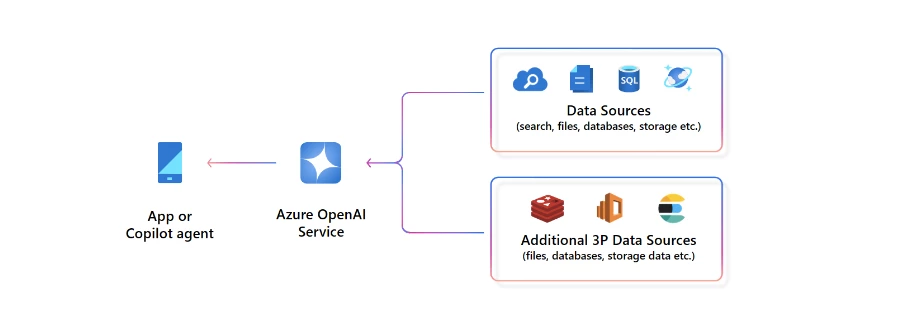

Copilots combine data with large language models (LLMs) to improve the response generation process. The process works as follows: the system receives a query (e.g., a question); before responding, it fetches information related to the query from a designated data source; and it then uses the retrieved content together with the query to guide the language model in formulating an appropriate response.
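The query, retrieve, and respond flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular copilot is implemented: the in-memory `DOCUMENTS` list, the word-overlap scoring, and the `generate` callable standing in for a model call are all hypothetical.

```python
import re
from typing import Callable

# Hypothetical in-memory data source; a real copilot would query an index or database.
DOCUMENTS = [
    "Expense reports are due on the fifth business day of each month.",
    "The VPN client must be updated before connecting from a new laptop.",
    "Vacation requests need manager approval at least two weeks in advance.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by shared words with the query (a toy relevance score)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep only documents that share at least one word with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_prompt(query: str, passages: list[str]) -> str:
    """Combine the retrieved passages and the query into one grounded prompt."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

def answer(query: str, generate: Callable[[str], str]) -> str:
    """Retrieve first, then pass the combined prompt to the model callable."""
    passages = retrieve(query, DOCUMENTS)
    return generate(build_prompt(query, passages))
```

Passing the model call in as `generate` keeps the sketch independent of any specific service; in practice that callable would wrap whichever LLM endpoint the application uses.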

The power of copilots is in their adaptability, particularly their unparalleled ability to seamlessly and securely tap into both internal and external data sources. This dynamic, always-updated integration doesn’t just increase the accessibility and usability of enterprise knowledge, it improves the efficiency and responsiveness of businesses to ever-evolving demands.

Although there is much excitement about copilot pattern-based solutions, it’s important for businesses to carefully consider the design elements needed for a durable, adaptable, and effective approach. How can AI developers ensure their solutions do not just capture attention, but also enhance customer engagement? Here are seven pillars to think through when building your custom copilot.

Retrieval: Data ingestion at scale

Data connectors are vital for businesses aiming to harness the depth and breadth of their data across multiple expert systems using a copilot. These connectors serve as the gateways between disparate data silos, connecting valuable information and making it accessible and actionable in a unified search experience. Developers can ground models on their enterprise data and seamlessly integrate structured, unstructured, and real-time data using Microsoft Fabric.

For copilots, data connectors are no longer just tools. They are indispensable assets that make real-time, holistic knowledge management a tangible reality for enterprises.

Enrichment: Metadata and role-based authentication

Enrichment is the process of enhancing and refining raw data to increase its value. In the context of LLMs, enrichment often revolves around adding layers of context, refining data for more precise AI interactions, and preserving data integrity. This helps transform raw data into a valuable resource.

When building custom copilots, enrichment helps data become more discoverable and precise across applications. By enriching the data, generative AI applications can deliver context-aware interactions.

LLM-driven features often rely on specific, proprietary data. Simplifying data ingestion from multiple sources is critical to create a smooth and effective model. To make enrichment even more dynamic, introducing templating can be beneficial. Templating means crafting a foundational prompt structure that is filled in at run time with the necessary data. This helps both safeguard and tailor AI interactions.
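As a rough sketch of templating, the example below uses Python's standard `string.Template` to define a fixed prompt structure whose fields are filled at run time. The template wording, the field names, and the "Contoso Mail" product name are illustrative assumptions.

```python
from string import Template

# Hypothetical foundational prompt: the instructions are fixed, the fields vary per request.
PROMPT_TEMPLATE = Template(
    "You are a support assistant for $product.\n"
    "Answer only from the context. If the context is insufficient, say so.\n\n"
    "Context:\n$context\n\n"
    "Question: $question"
)

def render_prompt(product: str, context: str, question: str) -> str:
    """Fill the template; substitute() raises KeyError if any field is missing."""
    return PROMPT_TEMPLATE.substitute(
        product=product, context=context, question=question
    )

print(render_prompt(
    "Contoso Mail",
    "- Password resets take effect within five minutes.",
    "How long does a password reset take?",
))
```

Because `substitute()` fails loudly on a missing field, an incomplete prompt is never sent to the model, which is one small way a template can act as a safeguard.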

The combined strength of data enrichment and chunking leads to AI quality improvements, especially when handling large datasets. Using enriched data, retrieval mechanisms can grasp cultural, linguistic, and domain-specific nuances. This results in more accurate, diverse, and adaptable responses, bridging the gap between machine understanding and human-like interactions.
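To make chunking and enrichment concrete, here is a minimal sketch that splits a document into overlapping word windows and attaches metadata to each chunk, including the roles allowed to see it. The `Chunk` fields, the window sizes, and the role names are assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    text: str
    source: str                  # where the chunk came from
    position: int                # order of the chunk within the source
    allowed_roles: frozenset[str]  # roles permitted to retrieve this chunk

def chunk_document(text: str, source: str, allowed_roles: set[str],
                   max_words: int = 50, overlap: int = 10) -> list[Chunk]:
    """Split text into overlapping word windows and enrich each with metadata."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks: list[Chunk] = []
    for position, start in enumerate(range(0, len(words), step)):
        window = words[start:start + max_words]
        chunks.append(Chunk(" ".join(window), source, position, frozenset(allowed_roles)))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks

def visible_chunks(chunks: list[Chunk], user_roles: set[str]) -> list[Chunk]:
    """Role-based filter: keep chunks that share at least one role with the user."""
    return [chunk for chunk in chunks if chunk.allowed_roles & set(user_roles)]
```

Filtering by role before retrieval results reach the prompt means the model never sees content the user is not entitled to read.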

Search: Navigating the data maze

Advanced embedding models are changing the way we understand search. By transforming words or documents into vectors, these models capture the intrinsic meaning and relationships between them. Azure AI Search, enhanced with vector search capabilities, is a leader in this transformation. Using Azure AI Search with the power of semantic reranking gives users contextually pertinent results, regardless of their exact search keywords.
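The idea that vectors capture meaning can be illustrated with cosine similarity, a standard measure of how closely two vectors point in the same direction. The three-dimensional vectors below are made up for the example; real embedding models produce vectors with hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)

# Hand-written toy vectors, not the output of any real embedding model.
EMBEDDINGS = {
    "laptop": [0.9, 0.1, 0.0],
    "notebook computer": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.9],
}

related = cosine_similarity(EMBEDDINGS["laptop"], EMBEDDINGS["notebook computer"])
unrelated = cosine_similarity(EMBEDDINGS["laptop"], EMBEDDINGS["banana"])
print(f"laptop vs notebook computer: {related:.3f}")
print(f"laptop vs banana: {unrelated:.3f}")
```

Although "laptop" and "notebook computer" share no keywords, their toy vectors score far higher than the unrelated pair, which is the behavior that lets vector search match on meaning rather than exact wording.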

With copilots, search processes can leverage both internal and external resources, absorbing new information without extensive model training. By continuously incorporating the latest available knowledge, responses are not just accurate but also deeply contextual, setting the stage for a competitive edge in search solutions.

Search starts with large-scale data ingestion: retrieving source documents, segmenting them, generating embeddings, and loading the resulting vectors into an index. When a user submits a query, the query is vectorized in the same way and sent to Azure AI Search, which retrieves the most relevant results so that they align closely with the user’s intent.
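The ingestion and query steps can be sketched end to end with a small in-memory index. Everything here is a simplified stand-in: sentences serve as chunks, a normalized word-count vector plays the role of an embedding, and the `VectorIndex` class is hypothetical rather than part of Azure AI Search.

```python
import math
import re

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def split_into_chunks(document: str) -> list[str]:
    """Segmentation step: one chunk per sentence (a deliberately simple rule)."""
    parts = re.split(r"(?<=[.!?])\s+", document.strip())
    return [part for part in parts if part]

class VectorIndex:
    """Toy index: word-count vectors over a vocabulary built at load time."""

    def __init__(self, chunks: list[str]) -> None:
        self.chunks = list(chunks)
        vocabulary = sorted({word for chunk in self.chunks for word in tokenize(chunk)})
        self.vocabulary = {word: i for i, word in enumerate(vocabulary)}
        # Index loading: embed every chunk once, up front.
        self.vectors = [self.embed(chunk) for chunk in self.chunks]

    def embed(self, text: str) -> list[float]:
        """Stand-in for an embedding model: L2-normalized word counts."""
        vector = [0.0] * len(self.vocabulary)
        for word in tokenize(text):
            slot = self.vocabulary.get(word)
            if slot is not None:
                vector[slot] += 1.0
        norm = math.sqrt(sum(v * v for v in vector))
        return [v / norm for v in vector] if norm else vector

    def search(self, query: str, top_k: int = 3) -> list[tuple[float, str]]:
        """Vectorize the query the same way, then rank chunks by dot product."""
        query_vector = self.embed(query)
        scored = [
            (sum(q * v for q, v in zip(query_vector, vector)), chunk)
            for vector, chunk in zip(self.vectors, self.chunks)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]

DOCUMENT = (
    "Passwords can be reset from the account settings page. "
    "Quarterly sales figures are published every April. "
    "Support tickets are answered within one business day."
)
index = VectorIndex(split_into_chunks(DOCUMENT))
top_score, top_chunk = index.search("How do I reset my account settings?", top_k=1)[0]
print(top_chunk)
```

The key design point is that documents and queries pass through the same `embed` function, so their vectors live in the same space and can be compared directly.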

Continuous innovation to refine search capabilities has led to a new concept of hybrid search. This innovative approach melds the familiarity of keyword-based search with the precision of vector search techniques. The blend of keyword, vector, and semantic ranking further improves the search experience, delivering more insightful and accurate results for end users.
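One common way to blend a keyword ranking with a vector ranking is Reciprocal Rank Fusion (RRF), sketched below. The document IDs and the two input rankings are invented for the example, and the constant `k = 60` is a conventional default rather than a value taken from this article.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each document earns 1 / (k + rank) per list it appears in."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))

# Hypothetical results from two separate retrievers for the same query.
keyword_ranking = ["doc-a", "doc-b", "doc-c"]
vector_ranking = ["doc-b", "doc-c", "doc-d"]

print(reciprocal_rank_fusion([keyword_ranking, vector_ranking]))
```

Documents that appear in both lists, such as `doc-b` and `doc-c` here, rise above documents found by only one retriever, which is the intuition behind combining keyword and vector results.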

Prompts: Crafting efficient and responsible interactions

In the world of AI, prompt engineering provides specific instructions to guide the LLM’s behavior and generate desired outputs. Crafting the right prompt is crucial to get not just accurate, but safe and relevant responses that meet user expectations.

Prompt efficiency requires clarity and context. To maximize the relevance of AI responses, it is important to be explicit with instructions. For instance, if concise data is needed, specify that you want a short answer. Context also plays a central role. Instead of just asking about market trends, specify current digital marketing trends in e-commerce. It can even be helpful to provide the model with examples that demonstrate the intended behavior.
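These guidelines (explicit instructions, specific context, and worked examples) can be assembled into a chat-style message list. The role and content dictionary layout is an assumption modeled on the format many chat-completion APIs accept, and the example texts are invented.

```python
def build_messages(instruction: str,
                   examples: list[tuple[str, str]],
                   question: str) -> list[dict[str, str]]:
    """Assemble a system instruction, few-shot examples, and the user's question."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, assistant_text in examples:
        # Each example pair demonstrates the intended behavior to the model.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": question})
    return messages

messages = build_messages(
    instruction=(
        "You are a marketing analyst. Answer in at most two sentences "
        "and focus on current digital marketing trends in e-commerce."
    ),
    examples=[
        ("What is driving mobile checkout growth?",
         "One-tap payment options and faster mobile sites are the main drivers."),
    ],
    question="Which trends matter most for small online retailers?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The system message carries both the length constraint and the domain context, so the final question can stay short without becoming ambiguous.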

Azure AI prompt flow enables users to add content safety filters that detect and mitigate harmful content, like jailbreaks or violent language, in inputs and outputs when using open-source models. Alternatively, users can opt for models offered through Azure OpenAI Service, which have content filters built in. By combining these safety systems with prompt engineering and data retrieval, customers can improve the accuracy, relevance, and safety of their application.

Achieving quality AI responses often involves a mix of tools and tactics. Regularly evaluating and updating prompts helps align responses with business trends. Intentionally crafting prompts for critical decisions, generating multiple AI responses to a single prompt, and then selecting the best response for the use case is a prudent strategy. Using a multi-faceted approach helps AI to become a reliable and efficient tool for users, driving informed decisions and strategies.
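The strategy of generating several responses and selecting the best can be sketched as a small best-of-n helper. Both callables are assumptions: `generate` stands in for a model call that may return a different response on each attempt, and `score` stands in for whatever quality measure fits the use case.

```python
from typing import Callable

def best_of_n(prompt: str,
              generate: Callable[[str, int], str],
              score: Callable[[str], float],
              n: int = 3) -> str:
    """Generate n candidate responses and return the highest-scoring one."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate(prompt, attempt) for attempt in range(n)]
    return max(candidates, key=score)

# Stand-ins for illustration only: canned responses and a keyword-based score.
CANNED = [
    "Sales rose.",
    "Sales rose 12% year over year, led by the online channel.",
    "It depends.",
]

def fake_generate(prompt: str, attempt: int) -> str:
    return CANNED[attempt % len(CANNED)]

def mentions_evidence(response: str) -> float:
    # Toy quality score: reward responses that cite a figure.
    return 1.0 if "%" in response else 0.0

print(best_of_n("Summarize last quarter's sales.", fake_generate, mentions_evidence))
```

In a real application the scoring function carries most of the weight, so it deserves the same regular review as the prompts themselves.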

User Interface (UI): The bridge between AI and users

An effective UI offers meaningful interactions to guide users through their experience. In the ever-evolving landscape of copilots, providing accurate and relevant results is always the goal. However, there can be instances when the AI system might generate responses that are irrelevant, inaccurate, or ungrounded. A UX team should implement human-computer interaction best practices to mitigate these potential harms, for example by providing output citations, putting guardrails on the structure of inputs and outputs, and by providing ample documentation on an application’s capabilities and limitations.

To mitigate potential issues like harmful content generation, various tools should be considered. For example, classifiers can be employed to detect and flag possibly harmful content, guiding the system’s subsequent actions, whether that’s changing the topic or reverting to a conventional search. Azure AI Content Safety is a great tool for this.
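The routing behavior described above, where a classifier's verdict decides whether to answer, change the topic, or fall back to conventional search, might look like the sketch below. The keyword blocklist is a toy stand-in for a real classifier and is not how Azure AI Content Safety works; the `Action` names are hypothetical.

```python
import re
from enum import Enum
from typing import Callable

class Action(Enum):
    ANSWER = "answer"
    CHANGE_TOPIC = "change_topic"
    FALLBACK_SEARCH = "fallback_search"

# Toy blocklist for illustration; a production system would call a trained classifier.
BLOCKLIST = {"weapon", "attack"}

def is_harmful(text: str) -> bool:
    """Flag text that contains any blocklisted word."""
    return any(word in BLOCKLIST for word in re.findall(r"[a-z]+", text.lower()))

def route(user_input: str, draft_response: str,
          classify: Callable[[str], bool]) -> Action:
    """Decide the system's next step from classifier verdicts on input and output."""
    if classify(user_input):
        return Action.CHANGE_TOPIC      # do not engage with a harmful request
    if classify(draft_response):
        return Action.FALLBACK_SEARCH   # discard the draft, show plain search results
    return Action.ANSWER

print(route("What is the vacation policy?", "Requests need manager approval.", is_harmful))
```

Checking both the input and the drafted output matters because a harmless question can still produce a problematic response.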

A core principle for Retrieval Augmented Generation (RAG)-based search experiences is user-centric design, emphasizing an intuitive and responsible user experience. The journey for first-time users should be structured to ensure they comprehend the system’s capabilities, understand its AI-driven nature, and are aware of any limitations. Features like chat suggestions, clear explanations of constraints, feedback mechanisms, and easily accessible references enhance the user experience, fostering trust and minimizing over-reliance on the AI system.

Continuous improvement: The heartbeat of AI evolution

The true potential of an AI model is realized through continuous evaluation and improvement. It is not enough to deploy a model; it needs ongoing feedback, regular iterations, and consistent monitoring to ensure it meets evolving needs. AI developers need powerful tools to support the complete lifecycle of LLMs, including continuously reviewing and improving AI quality. This not only brings the idea of continuous improvement to life, but also ensures that it is a practical, efficient process for developers.

Identifying and addressing areas of improvement is a fundamental step to continuously refine AI solutions. It involves analyzing the system’s outputs, such as ensuring the right documents are retrieved, and going through all the details of prompts and model parameters. This level of analysis helps identify potential gaps, and areas for refinement to optimize the solution.

Prompt flow in Azure AI Studio is tailored for LLMs and is transforming the LLM development lifecycle. Features like visualizing LLM workflows and the ability to test and compare the performance of various prompt versions empower developers with agility and clarity. As a result, the journey from conceptualizing an AI application to deploying it becomes more coherent and efficient, ensuring robust, enterprise-ready solutions.
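Comparing prompt versions comes down to running each one over the same test cases and scoring the outputs. The sketch below is a plain-Python illustration of that idea, not the prompt flow API: the templates, the test case, the substring-match metric, and the `fake_generate` stub are all assumptions.

```python
from typing import Callable

def evaluate_variant(template: str,
                     test_cases: list[dict[str, str]],
                     generate: Callable[[str], str]) -> float:
    """Return the fraction of test cases whose output contains the expected text."""
    if not test_cases:
        raise ValueError("at least one test case is required")
    hits = 0
    for case in test_cases:
        output = generate(template.format(question=case["question"]))
        if case["expected"].lower() in output.lower():
            hits += 1
    return hits / len(test_cases)

def compare_variants(variants: dict[str, str],
                     test_cases: list[dict[str, str]],
                     generate: Callable[[str], str]) -> dict[str, float]:
    """Score every prompt variant against the same test cases."""
    return {name: evaluate_variant(template, test_cases, generate)
            for name, template in variants.items()}

# Hypothetical prompt versions and a stub model for demonstration.
VARIANTS = {
    "v1": "{question}",
    "v2": "Answer briefly and name the city. {question}",
}
TEST_CASES = [{"question": "What is the capital of France?", "expected": "Paris"}]

def fake_generate(prompt: str) -> str:
    return "Paris" if "Answer briefly" in prompt else "I am not sure."

print(compare_variants(VARIANTS, TEST_CASES, fake_generate))
```

Keeping the test cases fixed across variants is what makes the scores comparable from one prompt revision to the next.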

Unified development

The future of AI is not just about algorithms and data. It’s about how we retrieve and enrich data, create robust search mechanisms, articulate prompts, infuse responsible AI best practices, and interact with and continuously refine our systems.

AI developers need to integrate pre-built services and models, prompt orchestration and evaluation, content safety, and responsible AI tools for privacy, security, and compliance. Azure AI Studio offers a comprehensive model catalog, including the latest multimodal models like GPT-4 Turbo with Vision, coming soon to Azure OpenAI Service, and open models like Falcon, Stable Diffusion, and the Llama 2 managed APIs. Azure AI Studio is a unified platform for AI developers. It ushers in a new era of generative AI development, empowering developers to explore, build, test, and deploy their AI innovations at scale. VS Code, GitHub Codespaces, Semantic Kernel, and LangChain integrations support a code-centric experience.

Whether creating custom copilots, enhancing search, delivering call center solutions, developing bots and bespoke applications, or a combination of these, Azure AI Studio provides the necessary support.

As AI continues to evolve, it is essential to keep these seven pillars in mind to help build systems that are efficient, responsible, and always at the cutting edge of innovation.

Are you eager to tap into the immense capabilities of AI for your enterprise? Start your journey today with Azure AI Studio!

We’ve pulled together two GitHub repos to help you get building quickly. The Prompt Flow Sample showcases prompt orchestration for LLMOps, using Azure AI Search and Cosmos DB for grounding. Prompt flow streamlines prototyping, experimenting, iterating, and deploying AI applications. The Contoso Website repository houses the eye-catching website shown at Microsoft Ignite, which features content and image generation capabilities along with vector search. These two repos can be used together to help build end-to-end custom copilot experiences.

Learn more

- Build with Azure AI Studio

- Join our SMEs during the upcoming Azure AI Studio AMA session – December 14th, 9-10am PT

- Azure AI SDK

- Azure AI Studio documentation

- Introduction to Azure AI Studio (learn module)

The post The seven pillars of modern AI development: Leaning into the era of custom copilots appeared first on Microsoft Azure Blog.