Sep 28

What’s new in Data & AI: Expanding choices for generative AI app builders

Generative AI is no longer just a buzzword or “tech for tech’s sake.” It’s here and it’s real today, as small and large organizations across industries adopt generative AI to deliver tangible value to their employees and customers. This has inspired and refined techniques like prompt engineering, retrieval augmented generation, and fine-tuning so organizations can successfully deploy generative AI for their own use cases and with their own data. We see innovation across the value chain, whether it’s new foundation models or GPUs, or novel applications of preexisting capabilities, like vector similarity search or machine learning operations (MLOps) for generative AI. Together, these rapidly evolving techniques and technologies will help organizations optimize the efficiency, accuracy, and safety of generative AI applications, which means everyone can be more productive and creative!

We also see generative AI inspiring a wellspring of new audiences to work on AI projects. For example, software developers who may have seen AI and machine learning as the realm of data scientists are getting involved in the selection, customization, evaluation, and deployment of foundation models. Many business leaders, too, feel a sense of urgency to ramp up on AI technologies to better understand not only the possibilities but also the limitations and risks. At Microsoft Azure, this expansion in addressable audiences is exciting, and pushes us to provide more integrated and customizable experiences that make responsible AI accessible for different skillsets. It also reminds us that investing in education is essential, so that all our customers can reap the benefits of generative AI, safely and responsibly, no matter where they are in their AI journey.

We have a lot of exciting news this month, much of it focused on providing developers and data science teams with expanded choice in generative AI models and greater flexibility to customize their applications. And in the spirit of education, I encourage you to check out some of these foundational learning resources:

For business leaders

- Building a Foundation for AI Success: A Leader’s Guide: Read key insights from Microsoft, our customers and partners, industry analysts, and AI leaders to help your organization thrive on your path to AI transformation.

- Transform your business with Microsoft AI: In this 1.5-hour learning path, business leaders will find the knowledge and resources to adopt AI in their organizations. It explores planning, strategizing, and scaling AI projects in a responsible way.

- Career Essentials in Generative AI: In this 4-hour course, you will learn the core concepts of AI and generative AI functionality, how you can start using generative AI in your own day-to-day work, and considerations for responsible AI.

For builders

- Introduction to generative AI: This 1-hour course for beginners will help you understand how LLMs work, how to get started with Azure OpenAI Service, and how to plan for a responsible AI solution.

- Start Building AI Plugins With Semantic Kernel: This 1-hour course for beginners will introduce you to Microsoft’s open source orchestrator, Semantic Kernel, and how to use prompts, semantic functions, and vector databases.

- Work with generative AI models in Azure Machine Learning: This 1-hour intermediate course will help you understand the Transformer architecture and how to fine-tune a foundation model using the model catalog in Azure Machine Learning.

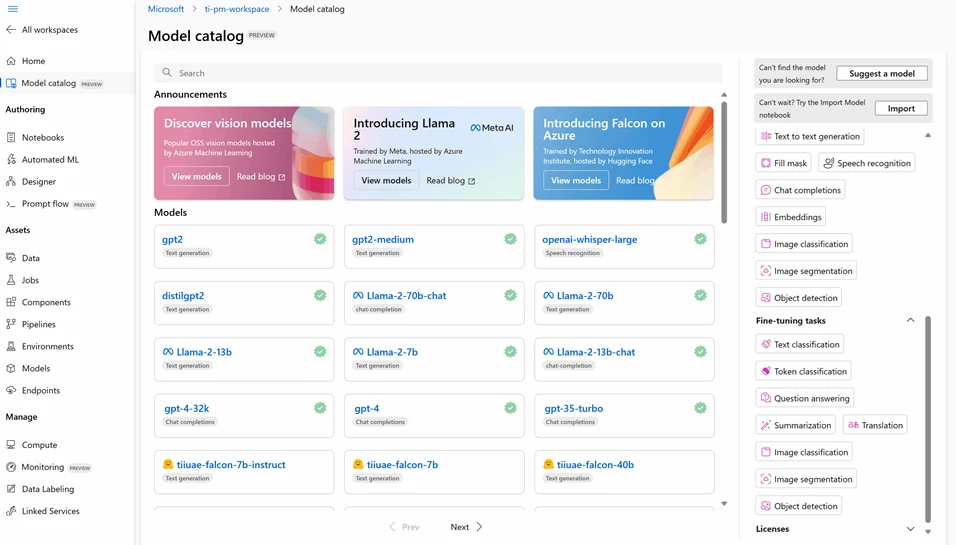

Access new, powerful foundation models for speech and vision in Azure AI

We’re constantly looking for ways to help machine learning professionals and developers easily discover, customize, and integrate large pre-trained AI models into their solutions. In May, we announced the public preview of foundation models in the Azure AI model catalog, a central hub to explore collections of various foundation models from Hugging Face, Meta, and Azure OpenAI Service. This month brought another milestone: the public preview of a diverse suite of new open-source vision models in the Azure AI model catalog, spanning image classification, object detection, and image segmentation capabilities. With these models, developers can easily integrate powerful, pre-trained vision models into their applications to improve performance for predictive maintenance, smart retail store solutions, autonomous vehicles, and other computer vision scenarios.

In July we announced that the Whisper model from OpenAI would also be coming to Azure AI services. This month, we officially released Whisper in Azure OpenAI Service and Azure AI Speech, now in public preview. Whisper can transcribe audio into text in an astounding 57 languages. The foundation model can also translate all those languages to English and generate transcripts with enhanced readability, making it a powerful complement to existing capabilities in Azure AI. For example, by using Whisper in conjunction with the Azure AI Speech batch transcription application programming interface (API), customers can quickly transcribe large volumes of audio content at scale with high accuracy. We look forward to seeing customers innovate with Whisper to make information more accessible for more audiences.

Operationalize application development with new code-first experiences and model monitoring for generative AI

As generative AI adoption accelerates and matures, MLOps for LLMs, or simply “LLMOps,” will be instrumental in realizing the full potential of this technology at enterprise scale. To expedite and streamline the iterative process of prompt engineering for LLMs, we introduced our prompt flow capabilities in Azure Machine Learning at Microsoft Build 2023, providing a way to design, experiment, evaluate, and deploy LLM workflows. This month, we announced a new code-first prompt flow experience through our SDK, CLI, and VS Code extension, now available in preview. Teams can now more easily apply rapid testing, optimization, and version control techniques to generative AI projects, for more seamless transitions from ideation to experimentation and, ultimately, production-ready applications.

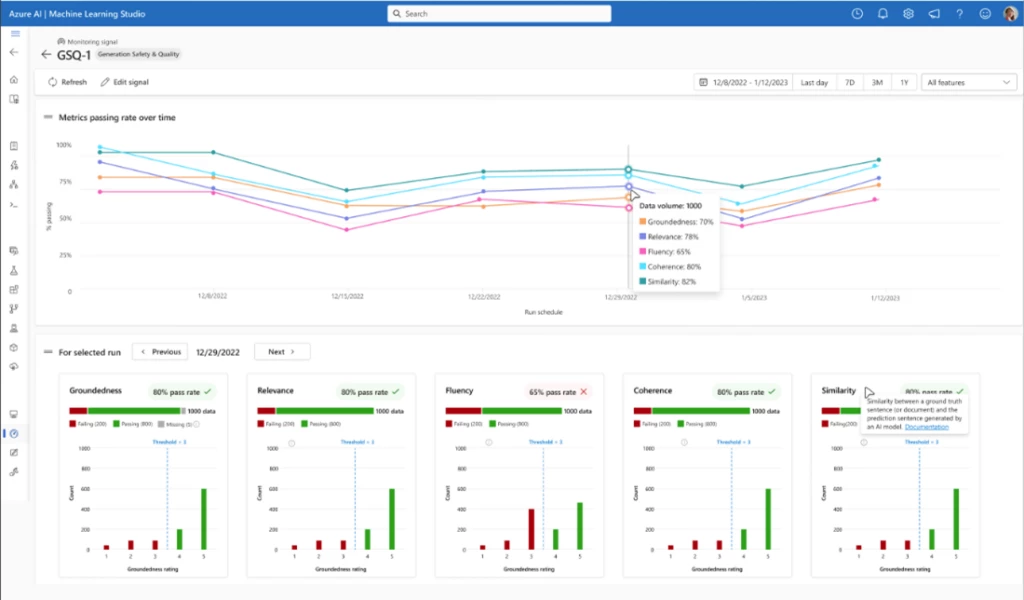

Of course, once you deploy your LLM application in production, the job isn’t finished. Changes in data and consumer behavior can influence your application over time, resulting in outdated AI systems, which negatively impact business outcomes and expose organizations to compliance and reputational risks. This month, we announced model monitoring for generative AI applications, now available in preview in Azure Machine Learning. Users can now collect production data, analyze key safety, quality, and token consumption metrics on a recurring basis, receive timely alerts about critical issues, and visualize the results over time in a rich dashboard.

Enter the new era of corporate search with Azure Cognitive Search and Azure OpenAI Service

Microsoft Bing is transforming the way users discover relevant information across the world wide web. Instead of providing a lengthy list of links, Bing will now intelligently interpret your question and source the best answers from various corners of the internet. What’s more, the search engine presents the information in a clear and concise manner along with verifiable links to data sources. This shift in online search experiences makes internet browsing more user-friendly and efficient.

Now, imagine the transformative impact if businesses could search, navigate, and analyze their internal data with a similar level of ease and efficiency. This new paradigm would enable employees to swiftly access corporate knowledge and harness the power of enterprise data in a fraction of the time. The architectural pattern behind it is known as Retrieval Augmented Generation (RAG): relevant content is first retrieved from the organization’s own data, then passed to a language model that generates an answer grounded in that content. By combining the power of Azure Cognitive Search and Azure OpenAI Service, organizations can now make this streamlined experience possible.
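To make the RAG pattern concrete, here is a minimal sketch of its two steps: retrieve relevant passages, then build a prompt that grounds the model in them. Everything in it is an illustrative assumption: the `Document` class, the `retrieve` and `build_grounded_prompt` helpers, and the sample corpus are hypothetical, and the toy keyword-overlap retriever merely stands in for a real search index such as Azure Cognitive Search. No Azure service is called; in practice the resulting prompt would be sent to a model hosted in Azure OpenAI Service.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def retrieve(query: str, corpus: list[Document], top_k: int = 2) -> list[Document]:
    """Toy retriever (assumption): rank documents by shared query words."""
    query_terms = set(query.lower().split())
    scored = []
    for doc in corpus:
        overlap = len(query_terms & set(doc.text.lower().split()))
        if overlap > 0:
            scored.append((overlap, doc))
    # Sort on the overlap count only; documents with no overlap were dropped.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_grounded_prompt(query: str, sources: list[Document]) -> str:
    """Place retrieved passages ahead of the question so the model can cite them."""
    context = "\n".join(f"[{doc.doc_id}] {doc.text}" for doc in sources)
    return (
        "Answer the question using only the sources below. "
        "Cite source ids in brackets.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical internal documents.
corpus = [
    Document("hr-001", "Employees accrue vacation days every month"),
    Document("it-042", "Reset your password through the self-service portal"),
    Document("hr-007", "Unused vacation days carry over to the next year"),
]
question = "how many vacation days carry over"
sources = retrieve(question, corpus)
prompt = build_grounded_prompt(question, sources)
print(prompt)
```

Only the two vacation-related documents reach the prompt; the password document shares no words with the question and is left out, which is what keeps the generated answer tied to verifiable sources.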

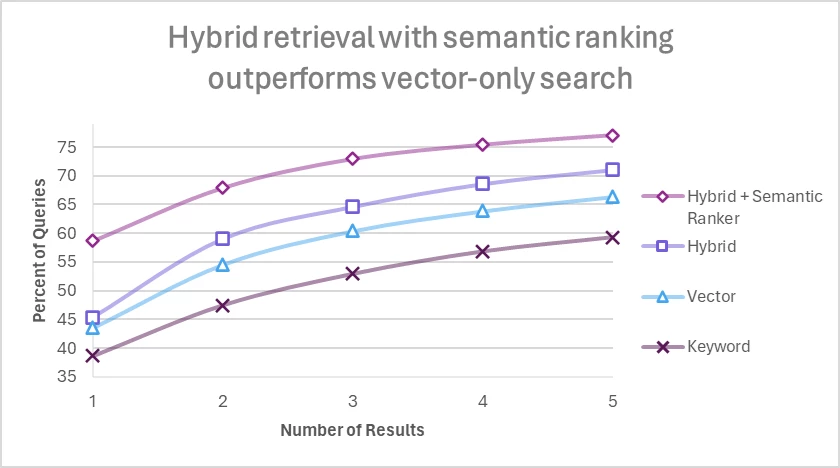

Combine Hybrid Retrieval and Semantic Ranking to improve generative AI applications

Speaking of search, through extensive testing on both representative customer indexes and popular academic benchmarks, Microsoft found that a combination of the following techniques creates the most effective retrieval engine for a majority of customer scenarios, and is especially powerful in the context of generative AI:

- Chunking long-form content

- Employing hybrid retrieval (combining BM25 and vector search)

- Activating semantic ranking

Any developer building generative AI applications will want to experiment with hybrid retrieval and reranking strategies to improve the accuracy of results and delight end users.
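The first two techniques in the list above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not how Azure Cognitive Search is implemented: chunking uses fixed word windows, the "vector search" is cosine similarity over word counts rather than learned embeddings, and the two rankings are merged with Reciprocal Rank Fusion (a common fusion method chosen here for the example; the post does not say how results are combined). Semantic ranking is not shown, and all function names are hypothetical.

```python
import math
from collections import Counter


def chunk_words(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into word windows that share `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    chunks = []
    for start in range(0, len(words), size - overlap):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break
    return chunks


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Simplified BM25 keyword scoring over whitespace tokens."""
    tokenized = [d.lower().split() for d in docs]
    n = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            df = sum(1 for t in tokenized if term in t)
            if df == 0:
                continue
            idf = math.log(1 + (n - df + 0.5) / (df + 0.5))
            freq = tf[term]
            norm = freq + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * freq * (k1 + 1) / norm
        scores.append(score)
    return scores


def cosine_scores(query: str, docs: list[str]) -> list[float]:
    """Stand-in for vector search: cosine similarity of word-count vectors."""
    q = Counter(query.lower().split())
    q_norm = math.sqrt(sum(x * x for x in q.values()))
    out = []
    for d in docs:
        v = Counter(d.lower().split())
        dot = sum(q[t] * v[t] for t in q)
        norm = q_norm * math.sqrt(sum(x * x for x in v.values()))
        out.append(dot / norm if norm else 0.0)
    return out


def ranking(scores: list[float]) -> list[int]:
    """Document indexes ordered from highest to lowest score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Merge rankings: each list contributes 1 / (k + rank) per document."""
    fused = Counter()
    for ranked in rankings:
        for rank, doc_index in enumerate(ranked, start=1):
            fused[doc_index] += 1.0 / (k + rank)
    return sorted(fused, key=lambda i: fused[i], reverse=True)


# Hypothetical pre-chunked passages and query.
passages = [
    "vector search finds passages with similar meaning",
    "bm25 ranks passages by keyword overlap and rarity",
    "the cafeteria menu changes every monday",
]
query = "keyword ranking with bm25"
fused = reciprocal_rank_fusion(
    [ranking(bm25_scores(query, passages)), ranking(cosine_scores(query, passages))]
)
print([passages[i] for i in fused])
```

Fusing on rank rather than raw score sidesteps the fact that BM25 and cosine scores live on different scales, which is why rank-based fusion is a convenient starting point when experimenting with hybrid retrieval.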

Improve the efficiency of your Azure OpenAI Service application with Azure Cosmos DB vector search

We recently expanded our documentation and tutorials with sample code to help customers learn more about the power of combining Azure Cosmos DB and Azure OpenAI Service. Applying Azure Cosmos DB vector search capabilities to Azure OpenAI applications enables you to store long-term memory and chat history, improving the quality and efficiency of your LLM solution for users. This is because vector search lets you efficiently retrieve the most relevant context to personalize Azure OpenAI prompts in a token-efficient manner. Storing vector embeddings alongside the data in an integrated solution minimizes the need to manage data synchronization and helps accelerate your time-to-market for AI app development.
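The idea of recalling only the most relevant history within a token budget can be sketched as follows. This is a hedged illustration, not the Azure Cosmos DB API: an in-memory list stands in for a container with vector search, the three-dimensional embeddings are hand-written placeholders for what an embedding model would produce, token cost is approximated by word count, and `MemoryItem`, `recall`, and the `min_similarity` threshold are hypothetical names chosen for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    embedding: list[float]  # kept next to the text, as in an integrated store


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recall(
    query_embedding: list[float],
    memory: list[MemoryItem],
    token_budget: int,
    min_similarity: float = 0.5,
) -> list[MemoryItem]:
    """Return the most similar memories that fit inside the token budget."""
    scored = [(cosine(query_embedding, m.embedding), m) for m in memory]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    selected, used = [], 0
    for similarity, item in scored:
        if similarity < min_similarity:
            break  # everything after this is even less relevant
        cost = len(item.text.split())  # rough token estimate (assumption)
        if used + cost > token_budget:
            continue  # too large for the remaining budget; try smaller items
        selected.append(item)
        used += cost
    return selected


# Hypothetical stored chat history with toy embeddings.
memory = [
    MemoryItem("User prefers answers in Spanish", [0.9, 0.1, 0.0]),
    MemoryItem("User asked about invoice export last week", [0.1, 0.9, 0.1]),
    MemoryItem("User's favorite color is green", [0.0, 0.1, 0.9]),
]
# Pretend embedding of the new question "How do I export invoices?"
query_embedding = [0.2, 0.95, 0.05]
context = recall(query_embedding, memory, token_budget=10)
print([m.text for m in context])
```

Only the invoice-related memory is similar enough to be recalled, so the prompt carries one short line of history instead of the full conversation, which is where the token savings come from.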

Embrace the future of data and AI at upcoming Microsoft events

Azure continuously improves as we listen to our customers and advance our platform for excellence in applied data and AI. We hope you will join us at one of our upcoming events to learn about more innovations coming to Azure and to network directly with Microsoft experts and industry peers.

- Enterprise scale open-source analytics on containers: Join Arun Ulagaratchagan (CVP, Azure Data), Kishore Chaliparambil (GM, Azure Data), and Balaji Sankaran (GM, HDInsight) for a webinar on October 3rd to learn more about the latest developments in HDInsight. Microsoft will unveil a full-stack refresh with new open-source workloads, container-based architecture, and pre-built Azure integrations. Find out how to use our modern platform to tune your analytics applications for optimal costs and improved performance, and integrate it with Microsoft Fabric to enable every role in your organization.

- Microsoft Ignite is one of our largest events of the year for technical business leaders, IT professionals, developers, and enthusiasts. Join us November 14-17, 2023, virtually or in person, to hear the latest innovations around AI, learn from product and partner experts, build in-demand skills, and connect with the broader community.

The post What’s new in Data & AI: Expanding choices for generative AI app builders appeared first on Azure Blog.